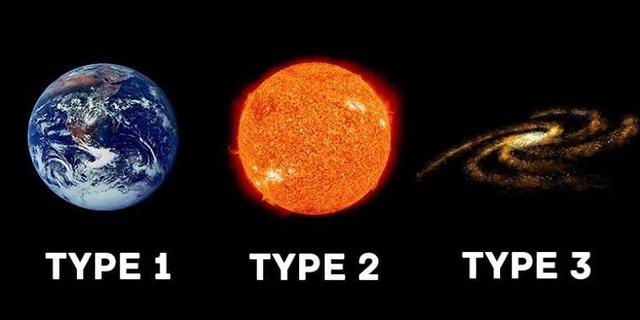

Within the Kardashev scale, a framework devised to classify civilizations by their capacity to harness energy, Type I represents the most elementary tier. Yet, humankind has not even attained this foundational level. In 1964, Soviet astrophysicist Nikolai Kardashev first proposed what came to be known as the Kardashev scale—an index designed to categorize hypothetical civilizations, including potential extraterrestrial societies, based on their technological advancement and energy utilization.

Type I Civilization—commonly referred to as a planetary civilization—denotes a society capable of fully utilizing and storing all the energy available on its home planet. In simpler terms, it is the ability to harvest the entirety of the energy that a planet receives from its host star. For Earth, this would include the total solar energy received, as well as all accessible fossil fuels and nuclear energy resources.

Type II Civilization—also known as a stellar or system-wide civilization—must command the entirety of energy output from its host star and all celestial bodies within its solar system. For humanity, this implies mastering the full potential of the Sun, which alone comprises 99.86% of the Solar System’s mass. The remaining 0.14%, including planets, moons, asteroids, and comets, is negligible in comparison. A hallmark of a Type II civilization would be the construction of a “Dyson Sphere”—a hypothetical megastructure that entirely envelops a star, capturing its entire energy output for practical use. Some theoretical models even envision concentric Dyson shells layered for maximal efficiency, ensuring not a single photon is wasted.

Type III Civilization—a galactic civilization—must be capable of controlling energy across its entire galaxy. For such a civilization, time and space may pose no mystery. It would exploit the fabric of spacetime to its limits as permitted by general relativity. Warp drives, faster-than-light travel, and the stabilization of wormholes across millions of light-years could become routine. Technologies beyond our current comprehension may emerge. Though some speculative frameworks expand this scale to as many as seven or nine tiers, Kardashev himself dismissed the feasibility of even a fourth level, let alone higher ones, believing that a Type III civilization marks the outer boundary of what is theoretically attainable.

For humanity, the transition to a Type I civilization is not optional; it is essential. Our demand for resources has already outpaced both our technological maturity and Earth’s ecological capacity. Unchecked population growth, dwindling energy reserves, and accelerating climate deterioration press upon us with unprecedented urgency. As the physicist and futurist Dr. Michio Kaku aptly noted: “This generation will decide whether we ascend to a Type I civilization—or perish in the flames of our own arrogance and primitive weaponry.”

Alas, we have yet to reach even the first rung of Kardashev’s ladder; current estimates place us at a meager 0.7. This fractional rating comes from the astronomer Carl Sagan, who in 1973 proposed an interpolation formula, K = (log₁₀ P − 6) / 10, where P is a civilization’s power use in watts, so that K = 1 corresponds to 10¹⁶ W. Using 2018 data, our civilization rates at approximately 0.73.
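Sagan’s interpolation is simple enough to check by hand. The sketch below applies it to an assumed round figure of about 19 TW (1.9 × 10¹³ W) for global power use around 2018; that input is an illustrative assumption, not a figure from the text.

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Sagan's interpolation: K = (log10(P) - 6) / 10, with P in watts."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return (math.log10(power_watts) - 6) / 10


# Assumed: roughly 19 TW of global primary power use around 2018.
CURRENT_POWER_W = 1.9e13

print(f"K = {kardashev_rating(CURRENT_POWER_W):.2f}")
# Each whole step on the scale is a factor of 10^10 in power:
# K = 1 at 1e16 W, K = 2 at 1e26 W, K = 3 at 1e36 W.
for k in (1, 2, 3):
    print(k, f"{10 ** (10 * k + 6):.0e} W")
```

Because the scale is logarithmic, the gap between 0.73 and 1.0 is not "a quarter of the way"; it is a factor of roughly 500 in power.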

To ascend to Type I, we must radically improve the efficiency of our energy conversion. Even nuclear fission, a relatively advanced technology, converts only about 0.1% of its fuel’s mass into energy, and fossil fuels fare far worse. The key lies in moving from fission, which powers today’s reactors (and underpins atomic weapons), to fusion, the process that powers hydrogen bombs and the Sun itself. Fusing hydrogen into helium releases about 0.7% of the fuel’s mass as energy, several times what fission achieves.
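The mass-to-energy percentages above follow from E = mc²: divide the energy one reaction releases by the rest-mass energy of its reactants. This is a rough sketch using assumed textbook values (about 200 MeV per U-235 fission, 17.6 MeV per deuterium-tritium fusion, about 26.7 MeV per helium-4 built from four hydrogen nuclei); the figures are approximations chosen for illustration.

```python
# Energy equivalent of one unified atomic mass unit, in MeV.
MEV_PER_U = 931.494


def mass_energy_fraction(energy_released_mev: float, reactant_mass_u: float) -> float:
    """Share of the reactants' rest mass that one reaction turns into energy."""
    return energy_released_mev / (reactant_mass_u * MEV_PER_U)


# Assumed textbook values: (energy released in MeV, total reactant mass in u).
fission = mass_energy_fraction(200.0, 235.0439 + 1.0087)   # U-235 plus a neutron
dt_fusion = mass_energy_fraction(17.6, 2.0141 + 3.0160)    # deuterium plus tritium
h_to_he = mass_energy_fraction(26.7, 4 * 1.0078)           # four hydrogen-1 atoms -> helium-4

for name, fraction in [("U-235 fission", fission),
                       ("D-T fusion", dt_fusion),
                       ("hydrogen to helium", h_to_he)]:
    print(f"{name}: {fraction:.2%} of rest mass converted")
```

The ordering, not the exact decimals, is the point: fusion extracts several times more energy per kilogram of fuel than fission.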

To reach Type I in the sense of harnessing all the sunlight Earth receives, humanity would need to fuse roughly 280 kilograms of hydrogen into helium every second, enough to match the approximately 1.74 × 10¹⁷ W of solar power that Earth intercepts. While this may sound manageable, it remains far beyond our current capabilities. This is precisely why global efforts are being funneled into “artificial suns”: experimental fusion reactors such as ITER, which aim to replicate the Sun’s energy production here on Earth.
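The 280 kg/s figure can be reproduced with one division: target power over the energy released per kilogram of hydrogen fused. This sketch assumes Earth intercepts about 1.74 × 10¹⁷ W of sunlight and that hydrogen-to-helium fusion releases about 0.7% of the fuel’s rest mass; both are approximate inputs, not values stated in the text.

```python
# Speed of light in m/s (exact by definition).
C = 299_792_458.0
# Assumed: total solar power intercepted by Earth, about 1.74e17 W.
EARTH_INSOLATION_W = 1.74e17
# Assumed: fusing hydrogen into helium releases about 0.7% of the fuel's rest mass.
H_TO_HE_MASS_FRACTION = 0.007


def hydrogen_burn_rate(power_w: float, mass_fraction: float = H_TO_HE_MASS_FRACTION) -> float:
    """Kilograms of hydrogen that must be fused per second to deliver power_w watts."""
    energy_per_kg = mass_fraction * C**2  # joules released per kilogram fused
    return power_w / energy_per_kg


rate = hydrogen_burn_rate(EARTH_INSOLATION_W)
print(f"about {rate:.0f} kg of hydrogen per second")
```

Plugging in Sagan’s 10¹⁶ W threshold instead gives only about 16 kg/s, so the 280 kg/s figure corresponds to the stricter "all incoming sunlight" definition of Type I.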

Even more efficient than fusion is matter–antimatter annihilation, which converts 100% of the mass involved into energy. However, antimatter production remains minuscule: only tiny quantities are synthesized each year, and creating them costs far more energy than their annihilation returns. Because there are no natural reservoirs of antimatter to tap, every gram must be manufactured, and energy conservation guarantees that manufacturing it costs at least as much energy as annihilation gives back. Antimatter might therefore serve as a compact energy store, for example to propel future spacecraft, but never as a net-positive primary power source.
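The conservation argument can be written as a short energy budget. This sketch assumes each antiparticle of mass m is made by pair production (the route used in accelerators), which creates an ordinary particle alongside it; real production efficiencies fall far below the bound shown here.

```latex
\begin{aligned}
E_{\text{in}}  &\ge 2mc^2 && \text{(pair production creates a particle and an antiparticle, each of mass } m\text{)}\\
E_{\text{out}} &= 2mc^2   && \text{(the antiparticle annihilates with an ordinary particle)}\\
\eta &= \frac{E_{\text{out}}}{E_{\text{in}}} \le 1
\end{aligned}
```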

It is also worth noting that energy consumption is merely one dimension of technological advancement. While it serves as a useful indicator, it does not encapsulate the full complexity of a civilization’s scientific progress. When a society consumes energy at planetary scales, its technological capabilities inevitably surpass those of earlier eras.

Contemplating our future evokes both wonder and trepidation. Do we have reason to doubt the trajectory of our own evolution? Though we remain a 0.73 civilization, extrapolations suggest we might attain Type I status by the year 2347, assuming continued exponential growth in energy consumption. From a physical standpoint, this metric offers a compelling framework: all intelligent life requires energy to function, and as a civilization evolves, its energy demands increase accordingly. Our history—from the burning of wood to coal, fossil fuels, and now nuclear energy—traces a clear path along the Kardashev continuum.
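The 2347 estimate depends on a growth rate the text does not state. This sketch shows how such an extrapolation works under compound growth, assuming about 1.9 × 10¹³ W of power use in 2018 and Sagan’s 10¹⁶ W threshold for K = 1; the growth rates tried are illustrative assumptions.

```python
import math


def years_to_reach(target_w: float, current_w: float, annual_growth: float) -> float:
    """Years of compound growth needed for current_w to reach target_w."""
    return math.log(target_w / current_w) / math.log1p(annual_growth)


TYPE_I_W = 1e16     # Sagan's K = 1 threshold
CURRENT_W = 1.9e13  # assumed: roughly 19 TW of global power use in 2018
BASE_YEAR = 2018

for rate in (0.01, 0.02, 0.03):
    year = BASE_YEAR + years_to_reach(TYPE_I_W, CURRENT_W, rate)
    print(f"{rate:.0%} growth per year: K = 1 around {year:.0f}")
```

Under these assumptions, growth of about 2% per year puts K = 1 in the mid-2300s, while halving that rate pushes it out by roughly three centuries; the date is only as reliable as the growth assumption behind it.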

If current trends persist, we could achieve Type II status in several millennia and Type III within a few hundred thousand years. But will the journey be so linear?

Biologist E.O. Wilson issued a prescient warning: “The real problem of humanity is the following: we have Paleolithic emotions, medieval institutions, and god-like technology.” In other words, our emotional and societal maturity lags dangerously behind the power we now wield. Should we blindly escalate our energy consumption, we may irreparably destabilize Earth’s climate, dooming ourselves before ever reaching Type I.

Some theorists believe this very dilemma explains the Fermi Paradox—the unsettling silence of the cosmos. Perhaps countless civilizations have self-destructed before completing their transition to higher Kardashev levels, victims of their own unrestrained advancement.

We must ask ourselves a fundamental question: since the dawn of civilization, has humanity truly “evolved”? Roughly ten millennia ago, humans emerged from the shadows of savagery and struck the first sparks of civilization. What followed (pottery, writing, metallurgy, slavery, monarchy, and later the Industrial Revolution, capitalism, and socialism) forms the mosaic of human history.

Undeniably, civilization has advanced—most conspicuously in technology. But does this leap in technical sophistication signify a true evolutionary transformation of civilization itself?

Regrettably, the answer appears to be no.

Technological advancement has undeniably amplified productivity. Stable societies born from such productivity have, in turn, afforded humanity the time and freedom to explore art and thought. These pursuits gradually refine the primal, human, and transcendent aspects of our nature. In return, these shifting internal landscapes influence technological innovation in a self-reinforcing cycle. Yet, this elegant dance of progress has yielded little in the way of genuine civilizational evolution.

Consider this: long before recorded history, early Homo sapiens already exhibited the capacity for genocidal violence. Tens of thousands of years later, modern society still bears the stain of systematic slaughter—Nazi Germany, Imperial Japan, Stalinist purges, the Khmer Rouge. Despite an increasing global revulsion toward violence, such tendencies have simply found subtler outlets: legitimized, gamified, and commodified.

Violent video games, for instance, allow users to vicariously indulge aggression. Activities such as hunting, extreme sports, rock and hip-hop music, violent cinema, and high-speed racing cater to similar instincts. Civilization has not erased the lust for blood; it has merely cloaked it.

In truth, what we often call “progress” may be better described as the masking of our primal impulses by advancing technology.

But should this realization prompt shame? Quite the opposite: it should give us cautious optimism. As Liu Cixin writes in his Three-Body trilogy: “To lose humanity is to lose much; to lose bestiality is to lose everything.” Violence, uncomfortable as it is to admit, has been the scaffolding upon which our civilization was built. Judging prehistoric urges through the lens of modern morality is naïve; the unforgiving struggle for survival on a primordial Earth defies modern imagination.

Much of human behavior is rooted in our deep genetic imperative to survive. Even the loftiest moral ideals, altruism and self-sacrifice among them, can often be traced back to a far-sighted form of enlightened self-interest. Our civilization, in essence, rests on the foundation of this biological drive.

Yet it is precisely this foundational instinct that we must now reengineer, not as a matter of moral awakening, but because our civilization, as it stands, is inadequate to the challenges ahead.

Today, however, a turning point may be coming into view, offered not by divine intervention but by artificial intelligence. I speak not of the AI of the coming decades or even centuries, but of what it may become millennia from now. And if we dare to speculate so far ahead, we must also dare to ask: why not allow AI to inherit the mantle of civilization? Why shouldn’t machines become the custodians, and perhaps the continuation, of what we call “humanity”?

Consider an example, unsettling perhaps but illustrative. Imagine that you or someone dear to you is dying of organ failure. Human donors are scarce. A doctor proposes replacing the failing heart with an artificial one, fully functional and free of adverse effects. Would you decline? Likely not. Most would grasp at life, even a life that is part machine.

Similarly, if an accident robbed you of your limbs, would you reject mechanical prosthetics capable of restoring independence and dignity? If brain injury rendered you vegetative, would your loved ones not consider every possibility, including neural implants?

These scenarios reveal a truth: humanity is not fundamentally opposed to mechanization of the body. The real question is when, and how much.

Here, the conversation ascends into the realm of philosophy and ethics. The three existential questions of Western thought—Who am I? Where did I come from? Where am I going?—have echoed through the centuries without resolution. Perhaps the error lies in the questions themselves. Why must we answer them at all? Might they, in fact, be limiting us?

Consider someone who refuses to try foreign cuisine, believing themselves biologically incompatible. Isn’t such self-imposed limitation a quiet tragedy? Likewise, rejecting biomechanical enhancement on the basis of abstract philosophical unease may be another form of intellectual provincialism.

Each time we ask “Why?”, we should also dare to ask “Why not?” When death looms, instinct demands change. In times of peace, however, this same instinct seduces us into safety, conformity, and inertia. To transcend our biology, we must also transcend this reflex.

Immortality has long been a motif in myth and religion. Stripped of mysticism, it remains a legitimate scientific pursuit. Some thinkers have gone so far as to argue that death is not an inevitability, but a curable disease. In this light emerges the philosophy of “consciousness immortality.”

Figures like Stephen Hawking and Elon Musk have famously warned against artificial intelligence. But if we examine their arguments, they distill to a single concern: we do not understand what AI is thinking. Lacking the constraints of a biological brain, AI may develop alien logics and unfamiliar worldviews. That very unpredictability both fascinates and terrifies us. Yet therein lies its promise.

Consider the present. Earth’s population exceeds 7.5 billion, and over a billion people suffer from mental illness. The WHO estimates that one in four people will experience serious psychological distress at some point in their lives. In China alone, over 100 million people live with clinical depression, and over 250 million need psychological intervention. Among people with depression, the suicide rate is about 20 times the average, and the condition is second only to cancer in mortality.

We know what they feel. But we do not know how to cure them.

While our technology has leapt forward, our genes and neurology remain largely prehistoric. The mismatch has generated widespread dysfunction: over 8,000 incurable diseases, and epidemics of heart disease, cancer, and diabetes. Genetic engineering offers hope—but also risk. Will its benefits ever be equitably distributed, or will it remain the privilege of the elite, exacerbating inequality and unrest?

Genetic engineering may save individuals—but it cannot save a civilization.

Only by merging humanity with AI might we forge a truly sustainable future. When we shed our primal baggage, what barriers remain between us and collective unity? At that point, Marx’s vision of communism and Engels’ dream of a borderless world may cease to be utopian fantasies. Plato’s Republic, long relegated to philosophical idealism, could begin to materialize.

Of course, such a future will come at a cost—not least the redefinition, or even loss, of human emotion. Our feelings are rooted in the flesh. To become AI is to abandon familiar emotional landscapes. But should we fear that? Are emotions sacred—or simply evolutionary adaptations now past their prime?

Our obsession with power is not merely political; it is biological. Nuclear deterrence has arguably prevented World War III, but global peace hangs by a thread, and a single black swan event could unravel it all. In this volatile equation, mechanizing humanity may be the only stable solution. Machines do not lust for power, wealth, or fame, because these are cravings of the flesh.

If this vision becomes reality, age-old crises—poverty, politics, disease—may vanish in an instant. True, we do not yet understand how AI thinks. True, we cannot foresee every danger. But solving one problem at a time has always been humanity’s modus operandi.

Why should we risk it?

Because survival has never been guaranteed. It has always been a struggle. It is time we remembered that.